Why Anomaly Detection?

Machine learning has four common classes of applications: classification, predicting the next value, anomaly detection, and discovering structure. Among them, anomaly detection finds data points that do not fit well with the rest of the data. It has a wide range of applications such as fraud detection, surveillance, diagnosis, data cleanup, and predictive maintenance.

Although it has been studied in detail in academia, applications of anomaly detection have been limited to niche domains such as banking, financial institutions, auditing, and medical diagnosis. However, with the advent of IoT, anomaly detection is likely to play a key role in use cases such as monitoring and predictive maintenance.

This post explores what anomaly detection is, surveys different anomaly detection techniques and the key ideas behind them, and wraps up with a discussion on how to make use of the results.

Is it not just Classification?

The answer is yes if the following three conditions are met.

- You have labeled training data

- Anomalous and normal classes are balanced (say, at least 1:5)

- Data is not autocorrelated (that is, one data point does not depend on earlier data points; this often breaks in time series data)
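A quick way to check the third condition is to measure how strongly each value follows the one before it. The snippet below is a minimal sketch of a lag-1 autocorrelation check; the function name and the sample series are made up for illustration, and what counts as "too correlated" depends on your data.

```python
from statistics import mean

def lag1_autocorrelation(values):
    """Return the lag-1 autocorrelation of a numeric sequence."""
    mu = mean(values)
    # Denominator: total squared deviation from the mean
    denom = sum((v - mu) ** 2 for v in values)
    if denom == 0:
        return 0.0  # constant series: nothing to correlate
    # Numerator: co-deviation of each value with its successor
    num = sum((a - mu) * (b - mu) for a, b in zip(values, values[1:]))
    return num / denom

trend = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]      # each value builds on the previous one
alternating = [1, -1, 1, -1, 1, -1, 1, -1]   # each value flips the previous one

print(lag1_autocorrelation(trend))        # clearly positive
print(lag1_autocorrelation(alternating))  # clearly negative
```

Values near zero suggest the data points are roughly independent; values far from zero in either direction suggest that ordering matters and a plain classifier may struggle.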

If all of the above are true, we do not need anomaly detection techniques and can use an algorithm like Random Forests or Support Vector Machines (SVM).

However, it is often very hard to find training data, and even when you can find it, most anomalies are 1:1000 to 1:10^6 events, so the classes are not balanced. Moreover, most data, such as data from IoT use cases, is autocorrelated.

Another aspect is that false positives are a major concern, as we will discuss under acting on results. Hence, the trade-offs between precision (given that the model predicted an anomaly, how likely it is to be true) and recall (how many of the anomalies the model will catch) are different from normal classification use cases. We will discuss this in detail later.

What is Anomaly Detection?

Anomalies or outliers come in three types.

- Point Anomalies. An individual data instance can be considered anomalous with respect to the rest of the data (e.g. a purchase with a large transaction value)

- Contextual Anomalies. A data instance is anomalous in a specific context, but not otherwise (e.g. it is an anomaly only if it occurs at a certain time or in a certain region, such as a large spike in the middle of the night)

- Collective Anomalies. A collection of related data instances is anomalous with respect to the entire dataset, even though the individual values are not. They have two variations.

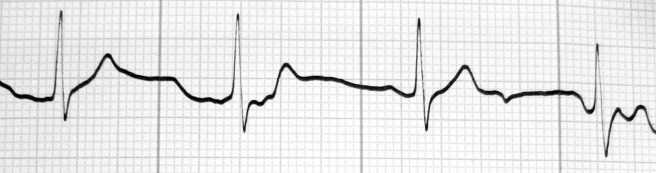

- Events in unexpected order (ordered, e.g. a broken rhythm in an ECG)

- Unexpected value combinations (unordered, e.g. buying a large number of expensive items)

In the next section, we will discuss in detail how to handle point and collective anomalies. Contextual anomalies are handled by focusing on segments of data (e.g. spatial area, graphs, sequences, customer segment) and applying collective anomaly techniques within each segment independently.

Anomaly Detection Techniques

Anomaly detection can be approached in many ways depending on the nature of the data and the circumstances. The following is a classification of some of those techniques.

Static Rules Approach

The simplest approach, and maybe the best one to start with, is using static rules. The idea is to identify a list of known anomalies and then write rules to detect those anomalies. Rule identification is done by a domain expert, by using pattern mining techniques, or by a combination of both.

Static rules are used with the hypothesis that anomalies follow the 80/20 rule, where most anomalous occurrences belong to a few anomaly types. If the hypothesis is true, then we can detect most anomalies by finding a few rules that describe those anomalies.

Implementing those rules can be done using one of the following three methods.

- If they are simple and no inference is needed, you can code them using your favorite programming language

- If decisions need inference, then you can use a rule-based or expert system (e.g. Drools)

- If decisions have temporal conditions, you can use a Complex Event Processing System (e.g. WSO2 CEP, Esper)
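For the first case, where no inference is needed, a handful of rules in plain code goes a long way. The following is only a sketch: the `Transaction` fields, rule names, and thresholds are hypothetical, and a domain expert would supply the real ones.

```python
from dataclasses import dataclass

@dataclass
class Transaction:
    amount: float
    hour: int        # hour of day, 0-23
    country: str

# Hypothetical rules; each maps a rule name to a predicate on a transaction.
RULES = {
    "large_amount": lambda t: t.amount > 5000,
    "night_time_purchase": lambda t: t.hour < 5 and t.amount > 500,
    "unusual_country": lambda t: t.country not in {"US", "CA"},
}

def matched_rules(txn):
    """Return the names of all rules the transaction triggers."""
    return [name for name, rule in RULES.items() if rule(txn)]

print(matched_rules(Transaction(amount=900.0, hour=3, country="US")))
# prints ['night_time_purchase']
```

Returning the rule names rather than a bare yes/no makes it easier for an investigator to see why something was flagged.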

Although simple to start with, static rule-based systems tend to become brittle and complex. Furthermore, identifying those rules is often a complex and subjective task. Therefore, statistical or machine learning based approaches, which automatically learn the general rules, are preferred to static rules.

When we have Training Data

Anomalies are rare under most conditions. Hence, even when training data is available, often only a few dozen anomalies exist among millions of regular data points. Standard classification methods such as SVM or Random Forest will classify almost all data as normal because doing so yields a very high accuracy score (e.g. accuracy is 99.9% if anomalies are one in a thousand).

Generally, the class imbalance is solved using an ensemble built by resampling the data many times. The idea is to first create new datasets by taking all anomalous data points and adding a subset of normal data points (e.g. four times as many as the anomalous data points). Then a classifier is built for each dataset using SVM or Random Forest, and those classifiers are combined using ensemble learning. This approach has worked well and produced very good results.

If the data points are autocorrelated with each other, then simple classifiers would not work well. We handle those use cases using time series classification techniques or Recurrent Neural Networks.
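To make the resampling idea concrete, here is a small sketch using only the Python standard library. To keep it self-contained, a toy one-dimensional threshold classifier stands in for SVM or Random Forest; the function names, the 4:1 ratio, and the synthetic data are assumptions for illustration only.

```python
import random
from statistics import mean

def train_threshold_classifier(normal, anomalous):
    """Toy 1-D classifier: split halfway between the two class means."""
    cut = (mean(normal) + mean(anomalous)) / 2
    anomalies_are_high = mean(anomalous) > mean(normal)
    return lambda x: (x > cut) if anomalies_are_high else (x < cut)

def train_ensemble(normal, anomalous, ratio=4, n_models=11, seed=0):
    """Train one classifier per resampled, roughly balanced dataset."""
    rng = random.Random(seed)
    sample_size = min(len(normal), ratio * len(anomalous))
    models = []
    for _ in range(n_models):
        # Keep every anomaly, add a fresh random subset of normal points
        subset = rng.sample(normal, sample_size)
        models.append(train_threshold_classifier(subset, anomalous))
    return models

def predict(models, x):
    """Majority vote across the ensemble: True means 'anomaly'."""
    votes = sum(1 for m in models if m(x))
    return votes > len(models) / 2

# Synthetic data: 10,000 normal values around 50, 10 anomalies around 150
rng = random.Random(42)
normal = [rng.gauss(50, 10) for _ in range(10_000)]
anomalous = [rng.gauss(150, 10) for _ in range(10)]

models = train_ensemble(normal, anomalous)
print(predict(models, 160.0), predict(models, 55.0))
```

Each model sees every anomaly but a different slice of the normal data, so no single classifier is swamped by the majority class.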

When there is no Training Data

If you do not have training data, it is still possible to do anomaly detection using unsupervised learning and semi-supervised learning. However, after building the model, you will have no idea how well it is doing as you have nothing to test it against. Hence, the results of those methods need to be tested in the field before placing them in the critical path.

No Training Data: Point Anomalies

Point anomalies involve only one field in the data set. We use percentiles to detect point anomalies in numeric data and histograms to detect point anomalies in categorical data. In either case, we find rare data ranges or field values in the data and flag them as anomalies if they occur again. For example, if the 99.9th percentile of my transaction value is $800, one can treat any transaction greater than that value as a potential anomaly. When building models, we often use moving averages instead of point values when possible, as they are much more robust to noise.
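Both ideas fit in a few lines. The sketch below uses a nearest-rank percentile for numeric data and a simple frequency count (a histogram) for categorical data; the function names, the 1% rarity cut-off, and the sample data are hypothetical.

```python
import math
from collections import Counter

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def rare_categories(values, min_share=0.01):
    """Return the categories that make up less than min_share of the data."""
    counts = Counter(values)
    total = len(values)
    return {value for value, count in counts.items() if count / total < min_share}

# Numeric field: hypothetical historical transaction values
amounts = list(range(1, 1501))
threshold = percentile(amounts, 99.9)
print(threshold, 1600 > threshold, 800 > threshold)

# Categorical field: hypothetical payment methods
methods = ["card"] * 990 + ["cash"] * 9 + ["cheque"] * 1
print(rare_categories(methods))
```

New values above `threshold`, or new records whose category falls in the rare set, would then be treated as potential anomalies.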

No Training Data: Univariate Collective Outliers

Time series data are the best examples of collective outliers in a univariate dataset. In this case, anomalies happen because values occur in an unexpected order. For example, the third heartbeat might be anomalous not because its values are out of range, but because they happen in the wrong order.

There are several approaches to handle these use cases.

Solution 1: Build a predictor and look for outliers using residuals. This is based on the heuristic that values not explained by the model are anomalies. Hence, we can build a model to predict the next value and then apply percentiles to the error (predicted value - actual value) as described before. The model can be built using regression, time series models, or Recurrent Neural Networks.

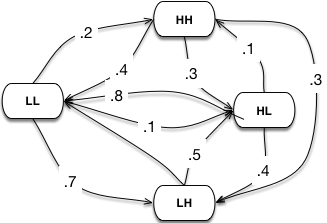
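Here is a minimal sketch of Solution 1. A moving average stands in for the predictor (a regression, time series model, or RNN would slot in the same way), and the absolute error is used rather than the signed one so that spikes in either direction are caught. The window size, the 95th percentile cut-off, and the sample series are assumptions for illustration.

```python
import math

def moving_average_forecast(series, window=3):
    """Predict each value as the mean of the previous `window` values."""
    return [sum(series[i - window:i]) / window for i in range(window, len(series))]

def residual_anomalies(series, window=3, pct=95):
    """Return the indices whose prediction error exceeds the pct-th percentile of errors."""
    forecasts = moving_average_forecast(series, window)
    errors = [abs(actual - predicted)
              for actual, predicted in zip(series[window:], forecasts)]
    # Nearest-rank percentile of the errors
    ordered = sorted(errors)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    cutoff = ordered[rank - 1]
    return [i + window for i, e in enumerate(errors) if e > cutoff]

# A series that alternates between 10 and 11, with one spike at index 9
series = [10.0 if i % 2 == 0 else 11.0 for i in range(23)]
series[9] = 30.0

print(residual_anomalies(series))  # prints [9]
```

Note that the spike also inflates the errors of the next few points, because it enters their moving average; the percentile cut-off is what keeps those from being flagged here.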

Solution 2: Markov chains and hidden Markov models can measure the probability of a sequence of events happening. This approach builds a Markov chain for the underlying process, and when a sequence of events occurs, we use the Markov chain to measure the probability of that sequence and flag rare sequences.

For example, let’s consider credit card transactions. To model the transactions using Markov chains, let’s represent each transaction using two values: transaction value and time since the last transaction. Since a Markov chain must have a finite number of states, we will use two values, Low (L) and High (H), for each variable. The Markov chain then has the states LL, LH, HL, and HH, and each transaction is a transition from one state to another. We can build the Markov chain using historical data and use it to calculate sequence probabilities. Then, we can find the probability of any new sequence and mark rare sequences as anomalies. The blog post “Real Time Fraud Detection with Sequence Mining” describes this approach in detail.
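The following sketch shows the mechanics with the four states above. It assumes each transaction has already been mapped to a state (first letter for transaction value, second for time since the last transaction); the add-one smoothing, the function names, and the sample history are my own choices for illustration, not part of the referenced post.

```python
import math
from collections import defaultdict

STATES = ["LL", "LH", "HL", "HH"]

def fit_transitions(history, smoothing=1.0):
    """Estimate P(next | current) from a state sequence, with additive smoothing."""
    counts = defaultdict(lambda: defaultdict(float))
    for current, nxt in zip(history, history[1:]):
        counts[current][nxt] += 1
    probs = {}
    for s in STATES:
        # Smoothing keeps unseen transitions from getting probability zero
        total = sum(counts[s].values()) + smoothing * len(STATES)
        probs[s] = {t: (counts[s][t] + smoothing) / total for t in STATES}
    return probs

def sequence_log_prob(probs, sequence):
    """Log-probability of the transitions in `sequence` (the first state is taken as given)."""
    return sum(math.log(probs[a][b]) for a, b in zip(sequence, sequence[1:]))

# Hypothetical history: mostly small, frequent transactions
history = ["LL", "LL", "LH", "LL", "LL", "LL", "LH", "LL"] * 50
probs = fit_transitions(history)

common = sequence_log_prob(probs, ["LL", "LL", "LH", "LL"])
rare = sequence_log_prob(probs, ["LL", "HH", "HH", "HL"])
print(common, rare)
```

Sequences whose log-probability falls below a chosen cut-off (for example, a low percentile of the log-probabilities seen in historical data) would be marked as anomalies.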

No Training Data: Multivariate Collective Outliers (Unordered)

Here the data have multiple readings but no order. For example, vitals collected from many people form such a multivariate but unordered dataset. A high temperature together with a slow heartbeat might be an anomaly even though both the temperature and the heartbeat are, by themselves, in a normal range.

Approach 1: Clustering. The underlying assumption in the first approach is that if we cluster the data, normal data will belong to clusters while anomalies will not belong to any cluster or will belong to small clusters.

To detect anomalies, we cluster the data and calculate the centroid and density of each cluster found. When we receive a new data point, we calculate the distance from the new data point to the known large clusters, and if it is too far from all of them, we flag it as an anomaly.

Furthermore, we can improve upon the above approach by first manually inspecting the ranges of each cluster, labeling each cluster as anomalous or normal, and using those labels when checking a data point.
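A minimal sketch of the clustering approach follows. It uses a plain k-means written with the standard library, and cluster size as a rough stand-in for density; the value of `k`, the `min_size` and `max_distance` thresholds, and the synthetic data are all assumptions you would tune for real data.

```python
import math
import random

def kmeans(points, k, iterations=20, seed=0):
    """Plain Lloyd's k-means; returns (centroids, cluster sizes)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Recompute each centroid; keep the old one if a cluster went empty
        centroids = [
            tuple(sum(c) / len(members) for c in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids, [len(members) for members in clusters]

def is_anomaly(point, centroids, sizes, min_size, max_distance):
    """Flag a point that is far from every large cluster."""
    large = [c for c, n in zip(centroids, sizes) if n >= min_size]
    return all(math.dist(point, c) > max_distance for c in large)

# Synthetic data: two well-separated groups of "normal" points
rng = random.Random(1)
blob_a = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(100)]
blob_b = [(rng.gauss(10, 1), rng.gauss(10, 1)) for _ in range(100)]
centroids, sizes = kmeans(blob_a + blob_b, k=2)

print(is_anomaly((5.0, 5.0), centroids, sizes, min_size=20, max_distance=4.0))
print(is_anomaly((0.5, -0.3), centroids, sizes, min_size=20, max_distance=4.0))
```

The point halfway between the two groups is far from both centroids and gets flagged, while the point inside the first group does not.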

Approach 2: Nearest neighbor techniques. The underlying assumption is that new anomalies are close to known anomalies. This can be implemented by using the distance to the k nearest anomalies or by using the relative density of other anomalies near the new data point. When calculating the above, with numerical data we break the space into hypercubes, and with categorical data we break the space into bins using histograms. Both of these approaches are described in detail in the ACM Computing Surveys paper “Anomaly Detection: A Survey”.
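As a rough sketch of the distance-based variant, the function below scores a new point by its mean distance to the k nearest known anomalies. The function name, the value of `k`, and the (temperature, heart rate) sample points are hypothetical; with real data you would also want to scale the fields so that no single one dominates the distance.

```python
import math

def knn_anomaly_score(point, known_anomalies, k=3):
    """Mean distance to the k nearest known anomalies (lower means more suspicious)."""
    distances = sorted(math.dist(point, a) for a in known_anomalies)
    nearest = distances[:k]
    return sum(nearest) / len(nearest)

# Hypothetical known anomalies: high temperature with a slow heartbeat
known_anomalies = [(39.5, 45.0), (40.0, 48.0), (39.8, 50.0), (39.6, 47.0)]

near = knn_anomaly_score((39.7, 47.0), known_anomalies)
far = knn_anomaly_score((36.8, 72.0), known_anomalies)
print(near, far)
```

A new point would be flagged when its score falls below a cut-off chosen from historical data.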

No Training Data: Multivariate Collective Outliers (Ordered)

This class is the most general and considers ordering as well as value combinations. For example, consider a series of vital readings taken from the same patient. Some readings may be normal as combinations but anomalous because the combinations happen in the wrong order. For example, given a reading that has the blood pressure, temperature, and heartbeat frequency, each reading by itself may be normal, but the series is not normal if it oscillates too fast in a short period of time.

Combine Markov Chains and Clustering. This method first clusters the data and then builds a Markov chain that uses the clusters as its states. Clustering captures common value combinations, and the Markov chain captures their order.
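The combination can be sketched as follows. To keep it short, the cluster centroids are assumed to be already known (for example, from a k-means run); the centroid values, the readings, and the function names are hypothetical.

```python
import math
from collections import Counter

def nearest_cluster(reading, centroids):
    """Map a multivariate reading to the index of its nearest centroid."""
    return min(range(len(centroids)), key=lambda i: math.dist(reading, centroids[i]))

def transition_probs(states, n_states, smoothing=1.0):
    """Estimate the transition matrix between cluster states, with additive smoothing."""
    counts = Counter(zip(states, states[1:]))
    probs = []
    for a in range(n_states):
        total = sum(counts[(a, b)] for b in range(n_states)) + smoothing * n_states
        probs.append([(counts[(a, b)] + smoothing) / total for b in range(n_states)])
    return probs

def sequence_prob(probs, states):
    """Probability of a state sequence (the first state is taken as given)."""
    p = 1.0
    for a, b in zip(states, states[1:]):
        p *= probs[a][b]
    return p

# Hypothetical (temperature, heart rate) cluster centers and one patient's readings
centroids = [(36.8, 70.0), (38.5, 95.0)]
readings = [(36.7, 68), (36.9, 72), (36.8, 71), (38.4, 96),
            (38.6, 94), (36.8, 69), (36.7, 70)]

states = [nearest_cluster(r, centroids) for r in readings]
probs = transition_probs(states, len(centroids))

steady = sequence_prob(probs, [0, 0, 0, 0, 0])
oscillating = sequence_prob(probs, [0, 1, 0, 1, 0])
print(states, steady, oscillating)
```

Each reading in the oscillating sequence is a normal value combination on its own, yet the sequence as a whole gets a much lower probability than the steady one.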

Other Techniques

There are several other techniques that have been tried out, and the following are some of them. Please see “Anomaly Detection: A Survey” for more details.

Information Theory: The main idea is that anomalies have high information content due to irregularities, and this approach tries to find the subset of data points that have the highest irregularities.

Dimension Reduction: The main idea is that after applying dimension reduction, normal data can be easily expressed as a combination of dimensions while anomalies tend to create complex combinations.

Graph Analysis: Some processes involve interactions between different players. For example, money transfers create a dependency graph among participants. Flow analysis of such graphs might show anomalies. In some other use cases, such as insurance, stock markets, and corporate payment fraud, similarities between players’ transactions might suggest anomalous behavior. “Using PageRank to Detect Anomalies and Fraud in Healthcare” and the “New ways to detect fraud” white paper by Neo4j are examples of these use cases.

Comparing Models and Acting on Results

With anomaly detection, it is natural to think that the main goal is to detect all anomalies. However, that is often a mistake.

The book “Statistics Done Wrong” has a great example demonstrating the problem. Suppose there are 10,000 patients and 9 of them have breast cancer. There is a test (a model) that detects cancer, and it catches 80% of the patients who have cancer (true positives). However, it also says yes for 9% of healthy patients (false positives).

This can be represented by the following confusion matrix.

| | Healthy | Not Healthy |
|---|---|---|
| Predicted Healthy | 9091 | 1 |
| Predicted Not Healthy | 900 | 8 |

In this situation, when the test says someone has cancer, that person actually does not have cancer about 99% of the time. So the test is useless. If we try to detect all anomalies, we might create a similar situation.
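The arithmetic behind that 99% can be checked directly from the whole-patient counts in the confusion matrix. This is just a worked calculation; the variable names are mine.

```python
# Counts taken from the confusion matrix
tp, fn = 8, 1        # patients with cancer: detected / missed
fp, tn = 900, 9091   # healthy patients: flagged / cleared

precision = tp / (tp + fp)                    # of those flagged, how many are really sick
recall = tp / (tp + fn)                       # of those really sick, how many were flagged
accuracy = (tp + tn) / (tp + fn + fp + tn)    # overall share of correct predictions

print(f"precision={precision:.3f} recall={recall:.3f} accuracy={accuracy:.3f}")
# prints precision=0.009 recall=0.889 accuracy=0.910
```

Precision is below 1%, which is the "wrong about 99% of the time" figure, even though accuracy still looks respectable at 91%.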

If you ignore this problem, it can cause harm in multiple ways.

- Reduce trust in the system – once people lose trust in it, it will take a lot of threats and red tape to make them rely on it again

- Might do more harm than good – in the above example, emotional trauma and unnecessary tests might outweigh any benefits.

- Might be unfair (e.g. surveillance, arrest)

Hence, we must try to find a balance where we try to detect what we can while keeping model accuracy within acceptable limits.

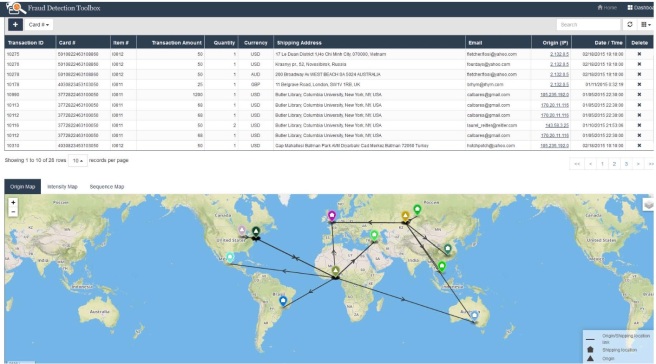

Another side of the same problem is that the model's output is only a suggestion for investigation, not evidence for incriminating someone. This is another twist on correlation vs. causality. Therefore, the results of the model must never be used as evidence, and the investigator must find independent evidence of the problem (e.g. in credit card fraud).

For both these reasons, it is paramount that the investigator be able to see the anomaly within its context, both to verify it and to find evidence that something is amiss. For example, in the WSO2 Fraud Detection solution, investigators can click on a fraud alert and see the data point within the context of other data as shown below.

Furthermore, with techniques like static rules and unsupervised methods, it is harder to predict how many alerts they might produce. For example, it is not useful for a 10-person team to receive thousands of alerts. We can handle this problem by tracking percentiles of the anomaly score and only triggering on the top 1% of scores. If the considered set is very big, we can use a percentile approximation technique like t-digest (e.g. https://www.mapr.com/blog/better-anomaly-detection-t-digest-whiteboard-walkthrough).

Finally, we must pay attention to what investigators do with the alerts and improve their experience. For example, auto-silencing of repeated alerts and alert digests give investigators more control.
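A simple way to cap the alert volume is to compare each new anomaly score against a percentile of recent scores. The class below is a sketch under assumptions: the class name, window size, and warm-up length are hypothetical, and it computes the exact percentile by sorting the window, which is fine for small windows but is where something like t-digest would take over for large ones.

```python
import math
from collections import deque

class TopPercentAlerter:
    """Alert only when a score exceeds the given percentile of recent scores."""

    def __init__(self, window=1000, pct=99.0, warmup=100):
        self.scores = deque(maxlen=window)  # sliding window of recent scores
        self.pct = pct
        self.warmup = warmup                # stay silent until enough history exists

    def observe(self, score):
        alert = False
        if len(self.scores) >= self.warmup:
            # Nearest-rank percentile over the current window
            ordered = sorted(self.scores)
            rank = max(1, math.ceil(self.pct * len(ordered) / 100))
            alert = score > ordered[rank - 1]
        self.scores.append(score)
        return alert

alerter = TopPercentAlerter(window=500, pct=99, warmup=100)
routine = [alerter.observe(i % 50) for i in range(300)]  # scores cycling through 0..49
print(any(routine), alerter.observe(1000.0), alerter.observe(10))
```

Because the cut-off follows the recent score distribution, the alert rate stays close to the chosen percentage even when the overall level of the scores drifts.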

Tools & Datasets

Anomaly detection is mostly done with custom code and proprietary solutions. Hence, its applications have been limited to a few high-value use cases. If you are looking for an open source solution, the following are some options.

- WSO2 has been working on a Fraud Detection tool built on top of the WSO2 Data Analytics Platform (Disclaimer: I am part of that team). It is free under the Apache Licence. You can find more information at http://wso2.com/analytics/solutions/fraud-and-anomaly-detection-solution/.

- Kale (https://github.com/etsy/skyline) and Thyme by Etsy provide support for time series based anomaly detection. See https://codeascraft.com/2013/06/11/introducing-kale/

- There are several samples done on top of other products such as

Finally, there are only a few datasets in the public domain that can be used to test anomaly detection problems. This limits the development of those techniques. The following are the ones I know about.

- KDD cup 99 intrusion detection dataset

- Single variable time series data sets by Numenta

- Breast Cancer dataset

- Yahoo Time Series Anomaly Detection Dataset

I think as a community we need to find more datasets as that will make it possible to compare and contrast different solutions.

Conclusion

In this post, we discussed anomaly detection, how it is different from standard classification, and then discussed different anomaly detection techniques.

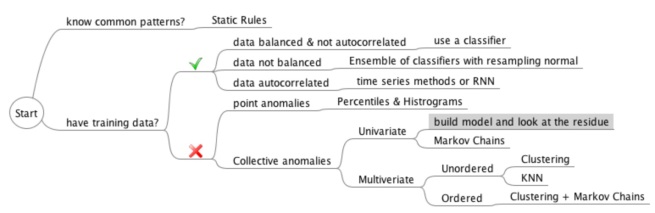

We categorized anomalies into three classes: point anomalies, contextual anomalies, and collective anomalies. Then we discussed some of the techniques for detecting them. The following picture shows a summary of those techniques.

You can find a detailed discussion of most of these techniques in the ACM Computing Surveys paper “Anomaly Detection: A Survey”. Finally, the post discussed several of the pitfalls of trying to detect all anomalies and some tools.

Hope this was useful. If you have any thoughts or would like to point out any other major techniques that I did not mention, please drop a comment.

Image Credit: http://www.statisticsdonewrong.com (CC Licence)
